The thinking vegetable

Potatoes, machines, and human consciousness: redefining intelligence and the essence of humanity in the era of artificial intelligence.

What properties would a potato need to possess to be considered conscious?

This intriguing question opens “Mind and Mechanism,” a 1946 article by American psychologist and historian Edwin G. Boring. Boring wasn’t envisioning a Frankenstein-like vegetable; he was laying the groundwork for an inventory of essential psychological functions. His list included memory, learning, insight, attitudes, environmental responsiveness, and the ability to symbolise. According to Boring, any potato with these attributes would, by definition, be conscious. He humorously claimed, “If we could find a potato that matches Socrates in performance, then we would, for all practical purposes, have Socrates.”

Of course, peeling back their skins would reveal stark differences between a potato and Socrates. Boring acknowledged this but insisted psychologists should not concern themselves with an organism’s internal structure. “In science,” he asserted, “things are what they do.” Since mental functions are invisible, Boring believed psychologists must infer them from observable behaviour. This idea formed the foundation of behaviourism—the notion that we can only discern what an organism thinks, believes, or feels by observing its actions. If a baby cries during a thunderstorm, we infer fear; if it reaches for a toy, we infer curiosity.

Boring believed there is an objective measure for every psychological trait. Intelligence is a score on an intelligence test, memory is the number of items recalled after a delay, and thirst is the amount of fluid consumed. When we infer psychological processes from objective measures, Boring’s potato-Socrates analogy begins to make sense. If a potato’s behaviour matches our expectations of a conscious being, it can be considered “conscious,” regardless of what it looks like inside.

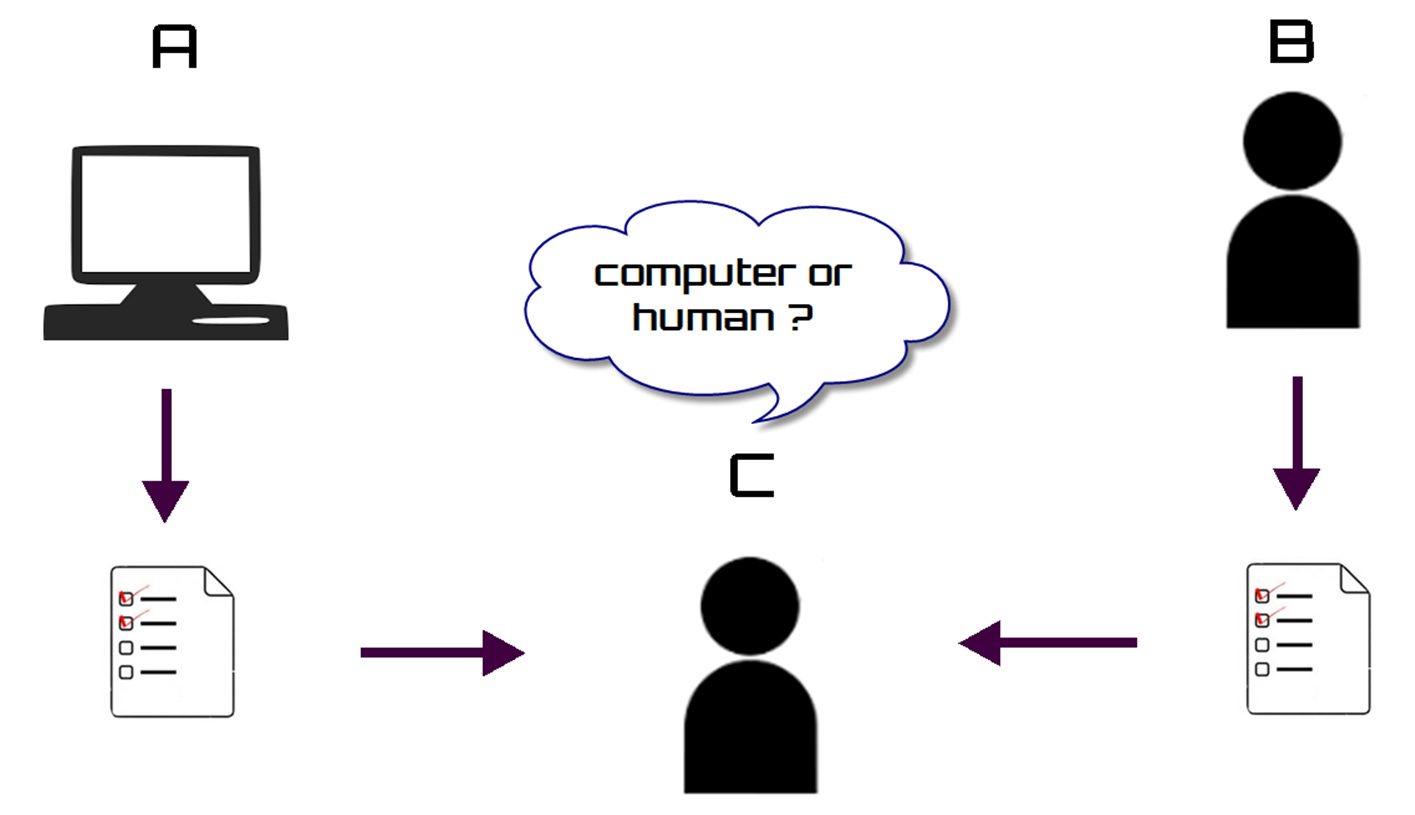

Behaviourist ideas took little time to spread from potatoes to machines. Four years after Boring’s “Mind and Mechanism” was published, the British mathematician and code breaker Alan Turing published “Computing Machinery and Intelligence,” which was devoted to answering the question, “Can machines think?” To answer it, Turing proposed an “Imitation Game.” Using a teletype terminal, an examiner would communicate with two respondents, one human and one computer, and decide which was which based solely on their dialogue. The only forbidden question: “Are you a computer or a human?”

Turing’s Imitation Game

A sample Imitation Dialogue might go:

Question: How much is 23,440 and 34?

Answer: 23,474.

Question: Have you studied history?

Answer: Yes.

Question: When was Scotland united with England?

Answer: 1603.

Question: Are you depressed?

Answer: I never get depressed.

It is no accident that Boring’s and Turing’s papers appeared within a few years of one another. In the 1940s and 1950s, behaviourism reigned supreme over experimental psychology. Turing’s “Imitation Game,” now known as the Turing Test, was an example of strict behaviourist thinking. What goes on inside a potato or a machine is unknowable and, for scientific purposes, irrelevant. If a machine fools a human into thinking it is human, it must be capable of thinking. Simple, right? Well, not quite. In 1950, when Turing proposed it, the test was entirely hypothetical; teletype terminals existed, but the few electronic computers of the day were nowhere near capable of holding a conversation. It would be decades before anyone could conduct a Turing Test.

Behaviourism reached its apotheosis in 1957 with the publication of B.F. Skinner’s Verbal Behavior. Skinner’s book was an attempt to apply behaviourist principles to language acquisition. Alas, his triumph was short-lived. In 1959, Noam Chomsky reviewed Skinner’s work and delivered a devastating critique, arguing that behaviourism could never explain the complexities of human language. Chomsky’s review is long and detailed, but his criticism of behaviourism can be summed up in one question: If I hold a gun to your head and force you to say that black is white, I may have changed your verbal behaviour, but did I really change your opinion? Opinions cannot be seen or measured, but no one would deny they exist. Chomsky showed that any science that limits itself to observable behaviour cannot account for the full complexity of human thought.

Over time, critiques like Chomsky’s became increasingly common, resulting in a marked shift in psychology. Researchers began focusing on internal cognitive processes, such as memory, perception, and problem-solving, recognising that these were crucial to understanding human behaviour. Cognitive science emerged, bringing together fields like psychology, computer science, linguistics, and neuroscience to study the mind as an information-processing system and moving beyond behaviourism’s strict confines.

At the same time, the 1960s and 1970s saw significant progress in A.I. research, with pioneers like John McCarthy and Marvin Minsky developing programs that could solve mathematical problems and play games like chess. The 1970s and 1980s introduced “expert systems” capable of medical diagnoses, chemical analyses, and other complex cognitive tasks.

In 1990, inspired by Turing, entrepreneur Hugh Loebner launched an annual “Turing Test” competition. Computer programs called chatbots competed through text-based conversations to convince judges of their humanity, with the most convincing earning a cash prize. Initially, these chatbots were amateurish, providing amusingly off-target responses. Over the next thirty years, advances in natural language processing made some chatbots seem remarkably human-like, but few judges were convinced that clever programming equated to conscious intelligence. Philosopher John Searle had already captured such concerns in a 1980 thought experiment that has come to be known as the “Chinese Room.”

Searle imagined himself locked in a room, equipped with a set of rules for responding to Chinese phrases that were slipped under the door. By mechanically following the rules, he could send convincing Chinese responses back. To those outside the room, it would seem that a Chinese speaker was inside. In reality, the person inside would have no idea what the phrases meant. Searle argued that his Chinese Room experiment also applied to the Turing Test. A computer could fool a judge by learning to manipulate syntax (grammatical word order) without understanding semantics (meaning). Like Chomsky, Searle concluded that observable behaviour alone cannot demonstrate intelligence.

A giant leap forward in what computers can do came with the advent of machine learning, particularly deep learning. Training neural networks on vast amounts of data improved computer performance on tasks such as image recognition, language translation, and game playing. In 2011, IBM’s Watson made headlines by defeating human champions on the American television quiz show “Jeopardy!” In 2016, Google’s AlphaGo beat the world champion Go player, demonstrating A.I.’s strategic thinking and decision-making prowess. The development of ChatGPT marked a significant milestone in natural language understanding and generation. ChatGPT generates coherent and contextually relevant text, advancing the boundaries of what A.I. can achieve. Indeed, chatting with ChatGPT seems remarkably like conversing with a knowledgeable human being.

A behaviourist might say that ChatGPT shows conscious intelligence; it would certainly pass the Turing Test. There is a catch, however. Every advance in artificial intelligence is followed by a revision of what constitutes human intelligence. Diagnosing skin cancer was assumed to require professional expertise until a computer learned how to do it. Almost immediately, the goalposts moved and diagnosing skin cancer was downgraded to mere “pattern matching,” a lower form of thinking. ChatGPT can write a poem, pass a medical licensing examination, and write its own computer code, but many experts refuse to call it intelligent. For these critics, the challenge isn’t just creating increasingly clever machines that can mimic human behaviour; it is understanding what it means to think in the first place. They believe that intelligence should be evaluated based not simply on conversation but on a broad range of capabilities, including problem-solving, creativity, and the ability to learn and adapt.

In the end, the humble potato, as envisioned by Edwin G. Boring, stands as a poignant metaphor. It beckons us to look beyond superficial behaviours and delve into the deeper mysteries beneath the surface. As we forge ahead in creating and coexisting with intelligent machines, we are continually reminded that the essence of consciousness and intelligence is far more intricate than we ever conceived. Just as we would never equate a potato with Socrates, we must rigorously contemplate the profound nature of thought, the true meaning of consciousness, and, ultimately, the essence of our humanity. In this journey, we are called to confront not just the potential of our creations but the profound depths of our own understanding.

I enjoyed this guided tour that led us into the current hot topic, AI, via the recent history of behaviourism, cognitive behaviourism, the Turing test, and Chomsky. I'd thought that his aha moment was in the late 70s, when he made a case for built-in linguistic capacity, and that this was the endpoint of behaviourism as normal science. Now I discover that his dissection of behaviourism began in the 1950s. I've got a lot of sympathy for the potato. You wonder what it might say once we've got the capacity to communicate with its refined streams of thought.